Over the last 10 months, the news has been filled with headlines about every new COVID test:

“Nasal swab test 98% accurate!”, “new rapid blood test 90% accurate!”, and the latest, for a test developed by Ellume, touting 95% accuracy.

But what does that mean, to be 95% accurate? Below, I will outline how they come up with these numbers, why they are bad, and why even the “better” numbers that scientists like to talk about are flawed.

The numbers they tell you

When a headline says a test is “X% accurate,” it is answering this question: if 1,000 people took the test, how many would get the correct result? For the Ellume headline, the claim is that 950 people would get the “right” result. “Right” here means people with COVID getting a positive result and people without the disease getting a negative result. The other 50 people would get the wrong result. “Wrong” means people with COVID getting the all-clear (a negative test) and people without COVID getting a positive result.

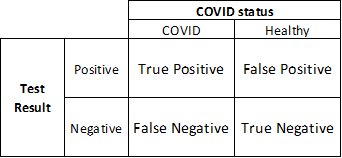

One major issue with this approach is that it lumps both kinds of “right” together, and both kinds of “wrong” together. The two kinds of “wrong” in particular can have very different consequences. Let’s consider them.

- Beth has a mild cold and goes in for a test. The test comes back positive (wrong). We call this a False Positive. What do you think Beth does with this news? My bet: she starts quarantining, and immediately gets another test to confirm the results.

- Sam thinks her allergies are acting up, but in reality she has caught COVID. Her test comes back negative (wrong). We call this a False Negative. Relieved, Sam goes about her week as usual. Going to work. Infecting others.

The consequences of being wrong on Sam’s test are potentially very high. For Beth, they are fairly minor: she spent some extra time and energy quarantining when she didn’t need to. You could argue, then, that a COVID test’s ability to avoid Sams is more important than its ability to avoid Beths. Avoiding False Negatives could be more important than avoiding False Positives.

Going back to the headline: if those 50 “wrong” results are Beths, it’s not that big of a deal, right? But what if they were all Sams?
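To make this concrete, here is a small sketch with two made-up tests, each run on 1,000 people. The counts (including the assumption that 100 of the 1,000 have COVID) are hypothetical, not Ellume’s data, and the `Outcomes` class is just an illustrative container. Both tests earn the same “95% accurate” headline, but one makes all 50 of its mistakes on Beths and the other on Sams.

```python
from dataclasses import dataclass


@dataclass
class Outcomes:
    """Counts from running a test on a group of people (hypothetical numbers)."""
    true_pos: int   # has COVID, tested positive ("right")
    false_neg: int  # has COVID, tested negative ("wrong": a Sam)
    false_pos: int  # healthy, tested positive ("wrong": a Beth)
    true_neg: int   # healthy, tested negative ("right")

    def total(self) -> int:
        return self.true_pos + self.false_neg + self.false_pos + self.true_neg

    def accuracy(self) -> float:
        # "X% accurate" lumps both kinds of "right" together
        return (self.true_pos + self.true_neg) / self.total()


# 1,000 people, 100 of whom have COVID (made-up split for illustration)
all_beths = Outcomes(true_pos=100, false_neg=0, false_pos=50, true_neg=850)
all_sams = Outcomes(true_pos=50, false_neg=50, false_pos=0, true_neg=900)

for name, test in [("all Beths", all_beths), ("all Sams", all_sams)]:
    print(f"{name}: {test.accuracy():.0%} accurate, "
          f"{test.false_pos} false positives, {test.false_neg} false negatives")
```

The single accuracy number is identical for both, even though the second test sends 50 infected people back to work.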

The better numbers they tell you

So it’s clear that grouping these things together into one big X% ACCURATE label is flawed. What’s a better way? Sensitivity and Specificity.

Sensitivity is the test’s ability to find positives: the share of people who have COVID who get a positive test. AKA the True Positive Rate.

Specificity is the test’s ability to find negatives: the share of people who don’t have COVID who get a negative test. AKA the True Negative Rate.

Don’t worry about why someone picked two such similar words; we’ll stick with True Positive Rate and True Negative Rate from here on. These two numbers are the two types of “right”. Paired with the False Positive and False Negative Rates, they give a more complete picture of a test’s accuracy on a population, often presented this way:

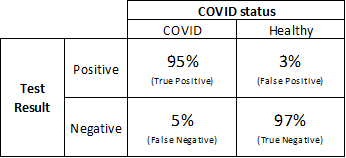
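In code, each of the four rates is just a count divided by the size of its group (people with COVID, or people without). The counts below are hypothetical, picked so the rates line up with the 95% and 97% figures Ellume reports; the helper name `rates` is illustrative only.

```python
def rates(true_pos: int, false_neg: int, false_pos: int, true_neg: int) -> dict:
    """Convert raw test counts into the four rates (illustrative helper)."""
    sick = true_pos + false_neg       # everyone who actually has COVID
    healthy = false_pos + true_neg    # everyone who doesn't
    return {
        "true_positive_rate": true_pos / sick,       # sensitivity
        "false_negative_rate": false_neg / sick,     # the Sams
        "true_negative_rate": true_neg / healthy,    # specificity
        "false_positive_rate": false_pos / healthy,  # the Beths
    }


# Hypothetical trial: 100 people with COVID, 900 without.
ellume_like = rates(true_pos=95, false_neg=5, false_pos=27, true_neg=873)
for name, value in ellume_like.items():
    print(f"{name}: {value:.0%}")
```

Each pair sums to 100%: every sick person is either a true positive or a false negative, and every healthy person is either a true negative or a false positive.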

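Ellume provided these rates in their research: a 95% True Positive Rate and a 97% True Negative Rate. Adding these to the table fills in the other two as well, since each pair must add up to 100%: a 5% False Negative Rate and a 3% False Positive Rate.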

Great! So Ellume’s test was right for COVID-infected people 95% of the time, and right for healthy people 97% of the time. But wait, isn’t that… pretty good? So if I take this test it will be right at least 95% of the time?

Nope.

The question you want answered isn’t the same question the researchers answered

The scientists making the test want to know how good it is. So do you. They ask themselves, “When everyone in Dallas takes this test, how often is it right?”

However, I’d wager that you care very little about Dallas. When you take a test, you ask yourself, “When I take this test, how often will it be right?”

The former question deals with many individuals, some with COVID and some without. The latter is concerned with only one individual: you.

To illustrate the consequences of this difference, imagine you take this test. Looking at the numbers above, the True Positive Rate is 95%. In their research, 95% of people with COVID got a positive test result. The False Positive Rate is 3%. Only 3% of healthy people got a positive test result.

You take this test and it comes back positive. You panic. But what are the chances you actually have COVID? 95%? A bit less? Surely at least in the 90s right? I mean, those numbers look strong!

What if I told you that the chance your test is right, the chance you actually have COVID, could be as low as 20%? Or less?

The real question you care about

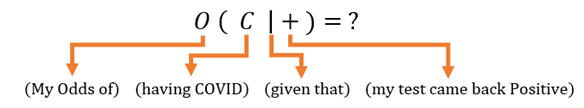

“What are the chances I have COVID, if my test comes back positive?”

The reason these two questions can have such different answers is that the broader question, the one researchers care about, doesn’t take into account how prevalent the disease is. If a random person takes a COVID test, the odds they have the disease are extremely low. There just aren’t that many people with COVID compared to healthy people. So even if an entire city were tested, most of the results would come from healthy people, with very few from people who actually have COVID.
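One way to see this is to count expected results for a whole city. The sketch below assumes a hypothetical city of 100,000 people and a round 0.5% prevalence, purely for illustration, and applies Ellume’s reported 95% True Positive Rate and 3% False Positive Rate. `count_positives` is an illustrative helper, not anything from the original research.

```python
def count_positives(population: int, prevalence: float,
                    true_pos_rate: float, false_pos_rate: float) -> tuple:
    """Expected positive results coming from sick people and from healthy people."""
    sick = population * prevalence
    healthy = population - sick
    return sick * true_pos_rate, healthy * false_pos_rate


# Hypothetical city of 100,000 with an assumed 0.5% prevalence.
from_sick, from_healthy = count_positives(100_000, 0.005, 0.95, 0.03)
share_real = from_sick / (from_sick + from_healthy)

print(f"{from_sick:.0f} positives from sick people, "
      f"{from_healthy:.0f} from healthy people")
print(f"share of positives who actually have COVID: {share_real:.1%}")
```

With these made-up inputs, healthy people produce about six times as many positive results as sick people (2,985 versus 475), so only around 14% of positives are real, even though the test itself behaves exactly as advertised.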

We can try to account for this. The numbers we’ve been given can help us answer that question, but first we have to do a little math and make some guesses. If this scares you, feel free to skip ahead. Here’s the real question again:

“What are the chances I have COVID, if my test comes back positive?”

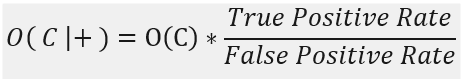

Expressed in shorthand, we can write this question as P(C | +): the probability of having COVID (C), given a positive test result (+).

The answer involves three numbers. We already have two of them: the True Positive Rate and the False Positive Rate. The final number we need is O(C), the prior odds of having COVID (how prevalent the disease is).
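One standard way to combine these three numbers is the odds form of Bayes’ rule. The notation here is an assumption, chosen to match O(C) in the text: + is a positive test, − a negative test, and H means healthy. It is a sketch of the idea, not necessarily the exact form behind the tables that follow.

$$
O(C \mid +) = O(C) \times \frac{\text{True Positive Rate}}{\text{False Positive Rate}},
\qquad
O(H \mid -) = O(H) \times \frac{\text{True Negative Rate}}{\text{False Negative Rate}}
$$

Odds and probability convert as $O = \frac{P}{1 - P}$ and $P = \frac{O}{1 + O}$, and the odds of being healthy are the reverse of the odds of having COVID: $O(H) = 1 / O(C)$.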

O(C) is the tricky unknown here. Fair warning: many of the specific results below depend heavily on how far off this estimate is. Nonetheless, let’s try. The CDC reports around 150k new cases per day (https://covid.cdc.gov/covid-data-tracker/#trends_dailytrendscases). If we assume that someone stays contagious for 14 days, that gives roughly 150k × 14 = 2.1 million current COVID cases in the US.

The chance that a random person has COVID, then, is 2.1 million (# of cases) divided by the US population (about 332 million), which is about 0.6325%. Just a little more than half a percent of the US population has COVID.
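The same back-of-the-envelope estimate, written out in Python. The inputs are exactly the text’s rough assumptions (150k new cases per day, 14 days contagious, 332 million people), not a measured prevalence.

```python
# Rough prior estimate, following the assumptions in the text.
new_cases_per_day = 150_000   # approximate CDC daily case count
days_contagious = 14          # assumption: each case counts as "current" for 14 days
us_population = 332_000_000   # approximate US population

current_cases = new_cases_per_day * days_contagious
prior_prob = current_cases / us_population

print(f"{current_cases:,} current cases -> {prior_prob:.4%} of the population")
```

Changing `days_contagious` or the daily case count shifts the prior directly, which is why the results that follow should be read as rough.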

The real answer

Here are the results of the math.

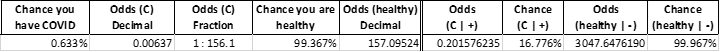

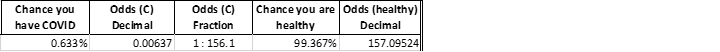

The left half displays the estimate of O(C), the general chance of having COVID before you take your test. That estimate is a probability, but the equation works with odds, so we convert it (odds = probability ÷ (1 − probability)). Also listed are your odds of being healthy, which are just the reverse.
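The probability-to-odds conversion is a one-liner each way. The helper names `prob_to_odds` and `odds_to_prob` are hypothetical, and the prior is the 2.1 million / 332 million estimate from the text.

```python
def prob_to_odds(p: float) -> float:
    """Probability p -> odds p : (1 - p)."""
    return p / (1 - p)


def odds_to_prob(odds: float) -> float:
    """Odds -> probability; the inverse of prob_to_odds."""
    return odds / (1 + odds)


prior_prob = 2_100_000 / 332_000_000    # ~0.6325%, the estimate from the text
odds_covid = prob_to_odds(prior_prob)   # ~0.0064, i.e. about 1 : 157
odds_healthy = 1 / odds_covid           # the reverse: about 157 : 1

print(f"odds of COVID: {odds_covid:.4f}, odds of healthy: {odds_healthy:.1f}")
```

For a rare condition the odds and the probability are nearly the same number (0.6365% versus 0.6325% here); the distinction starts to matter once the numbers get large.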

On the right are the answers we seek. Mechanically, what we’re doing here is updating our general odds of having COVID with the additional information we get from the test. Highlighted is the answer to our question, “How likely am I to have COVID if my test comes back positive?” Only about 17%. Not very high. Even if you take Ellume’s test and it comes back positive (+), that works out to only about a 1 in 6 chance of having COVID. In fact, you could get more than one positive result in a row and still be healthy as a clam.
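The “updating” described above can be sketched in a few lines, using the odds form of Bayes’ rule with the prior estimated earlier and Ellume’s reported 95% and 3% rates. This is a minimal sketch, not necessarily how the original tables were produced. The `n_positives` parameter is a hypothetical addition that applies the test repeatedly, and it assumes each result is independent given your true status, which real repeat tests may well not be.

```python
def posterior_prob_positive(prior_prob: float, true_pos_rate: float,
                            false_pos_rate: float, n_positives: int = 1) -> float:
    """Chance of COVID after n positive results (odds form of Bayes' rule).

    Assumes repeated results are independent given your true status.
    """
    odds = prior_prob / (1 - prior_prob)
    likelihood_ratio = true_pos_rate / false_pos_rate  # 0.95 / 0.03, about 31.7
    odds *= likelihood_ratio ** n_positives
    return odds / (1 + odds)


prior = 2_100_000 / 332_000_000
for n in (1, 2):
    p = posterior_prob_positive(prior, 0.95, 0.03, n)
    print(f"{n} positive result(s): {p:.1%} chance of COVID")
```

Under that independence assumption, one positive gives roughly 17% (matching the highlighted figure) and two positives in a row give roughly 86%, which still leaves about a 1 in 7 chance that you are healthy.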

On the other hand, if your test comes back Negative (-) you can be pretty confident that you don’t have COVID. The chance your test was right, and you are healthy, is 99.967%.
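The negative case runs the same update from the healthy side: start from the odds of being healthy and multiply by True Negative Rate ÷ False Negative Rate. Again a sketch under the text’s prior estimate and Ellume’s reported rates; `posterior_prob_healthy_negative` is a hypothetical helper name.

```python
def posterior_prob_healthy_negative(prior_prob: float, true_neg_rate: float,
                                    false_neg_rate: float) -> float:
    """Chance you are healthy after one negative result (odds form of Bayes' rule)."""
    odds_healthy = (1 - prior_prob) / prior_prob
    odds_healthy *= true_neg_rate / false_neg_rate  # 0.97 / 0.05 = 19.4
    return odds_healthy / (1 + odds_healthy)


prior = 2_100_000 / 332_000_000
p_healthy = posterior_prob_healthy_negative(prior, 0.97, 0.05)
print(f"{p_healthy:.3%} chance you are healthy after a negative result")
```

A negative result starts from already-lopsided odds of about 157 : 1 in favor of being healthy, so even a modest boost pushes the answer close to certainty.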

Large assumptions

The biggest problem with the math above is that we really don’t know an accurate value of O(C), your odds of having COVID prior to taking a test. In fact, our estimate of these prior odds above overlooks a rather important point: people who take COVID tests are almost certainly more likely to have it than a random person off the street.

Sure, some people just take a test as a matter of routine: their job requires it, or they got on a plane, or hell, maybe they’re just paranoid. Statistically, we would say that whether those people take a test is independent of whether they have COVID. For them, the math above is probably reasonably accurate.

But for many others, these two things are very much connected. People who are sick, displaying symptoms, will get a test. People who know they’ve been exposed to a COVID-positive colleague get a test. Instinctively we know that these folks must be more likely to have COVID than a random healthy person who hasn’t left their house in a month. Anything that makes you more likely to have COVID would update your prior odds O(C), and change that math.

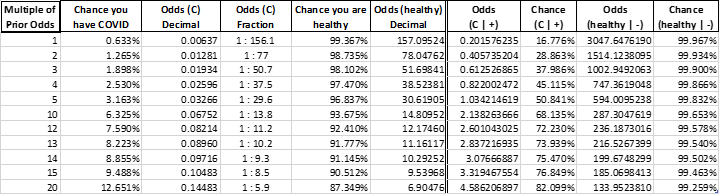

While we can’t know the real prior odds, we can look at a range of scenarios. I’ve added a new column on the left, “Multiple of Prior Odds.” The first row, 1, is the same scenario we looked at before: your prior odds of having COVID are equal to those of a random person picked off the street.

Each row below shows how reliable a positive result is if you were some number of times more likely to have COVID than the average Joe. Row 2, for example, says that even if you were twice as likely as a typical person to have COVID, the chance that your positive test is correct is only about 29%.

It’s not until your prior odds are five times higher that the chance of Ellume’s test correctly identifying COVID beats a coin flip (>50%).
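The scenario table can be approximated by scaling the prior odds before applying the test. This sketch assumes the same prior estimate and Ellume’s reported rates as before; `posterior_with_multiplier` is a hypothetical helper, and the multipliers 1 through 6 are simply the rows checked here.

```python
def posterior_with_multiplier(prior_prob: float, multiple: float,
                              true_pos_rate: float = 0.95,
                              false_pos_rate: float = 0.03) -> float:
    """Chance of COVID after one positive, with prior odds scaled by `multiple`."""
    prior_odds = prior_prob / (1 - prior_prob) * multiple
    post_odds = prior_odds * true_pos_rate / false_pos_rate
    return post_odds / (1 + post_odds)


prior = 2_100_000 / 332_000_000
for multiple in range(1, 7):
    p = posterior_with_multiplier(prior, multiple)
    flag = "(better than a coin flip)" if p > 0.5 else ""
    print(f"{multiple}x prior odds: {p:.1%} {flag}")
```

The 1x and 2x rows land near the 17% and 29% figures in the text, and 5x is the first multiplier that crosses 50%.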

Finally

When we throw around numbers in the 90s, things sound great. 95% is an A, right? It paints a picture of “it’s good,” when in reality the answer depends completely on the question you’re asking. A test can score 95-something and still be terrible at doing the thing you want. Or it can be great.

In the case of Ellume, the test is pretty good at some things. It’s not so good at others. But beware of absorbing numbers and applying them to questions you have in your head. Statistics are produced to answer very specific questions about a very specific situation. The questions they answer may not be what you think. Statistics also have the danger of being bite-sized and easy to pass around. Be skeptical of anything in a percent.

One last point: accuracy, sensitivity, or any other measure of how well a test works is not the only thing that matters. Different tests can be more or less invasive, and more or less expensive. Some tests might be more difficult to administer and require more experience on the part of the technician. Some might require experienced scientists interpreting blood work to even produce a result. There is value to be had in all of these tests. They probably all have a place.

“All models are wrong. Some models are useful.”